Background

In today’s digital world, both positive and negative news can strongly affect stock prices. An investment firm wanted to build an AI/ML model that monitors and analyzes social media and determines overall sentiment to inform its investment decisions.

Challenge

The growing volume of information published on the internet creates a great deal of noise, so the firm needed a way to separate signal from noise. To turn the information available on Twitter into actionable insights, it needed an effective, easy-to-use, one-stop solution built on machine learning.

Solution

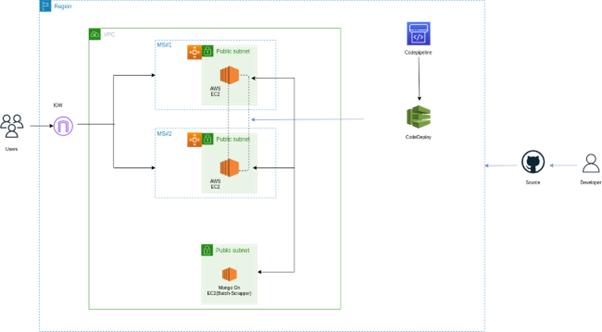

The investment firm used Digital Alpha’s decision support platform on AWS to build the solution. Development involved two algorithms. The first scrapes Twitter to download top tweets based on specific keywords, companies, and industry expert handles. The second analyzes the tweets’ content and converts it into social sentiment indexes.
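As a rough illustration of the second algorithm, the sketch below turns per-tweet scores into a daily index per keyword. It is not the firm's actual model: the word lists, the `tweet_score` and `sentiment_index` functions, and the (day, keyword, text) input shape are all assumptions made for the example, and a production system would use a trained sentiment model rather than a toy lexicon.

```python
from collections import defaultdict
from datetime import date

# Hypothetical toy lexicon, used only to keep the example self-contained.
POSITIVE = {"beat", "growth", "profit", "upgrade", "strong"}
NEGATIVE = {"miss", "loss", "lawsuit", "downgrade", "weak"}


def tweet_score(text: str) -> int:
    """Return +1, -1, or 0 depending on which lexicon matches more words."""
    words = {w.strip(".,!?#@").lower() for w in text.split()}
    pos = len(words & POSITIVE)
    neg = len(words & NEGATIVE)
    return (pos > neg) - (pos < neg)


def sentiment_index(tweets):
    """Average tweet scores per (day, keyword).

    `tweets` is an iterable of (day, keyword, text) tuples (assumed shape).
    The result maps (day, keyword) to a mean score between -1 and 1.
    """
    buckets = defaultdict(list)
    for day, keyword, text in tweets:
        buckets[(day, keyword)].append(tweet_score(text))
    return {key: sum(scores) / len(scores) for key, scores in buckets.items()}


if __name__ == "__main__":
    day = date(2024, 1, 2)
    sample = [
        (day, "ACME", "ACME posts strong profit growth!"),
        (day, "ACME", "Analysts downgrade ACME after lawsuit"),
        (day, "ACME", "ACME opens a new office"),
    ]
    print(sentiment_index(sample))
```

An index near 1 would indicate mostly positive tweets for that keyword on that day, and an index near -1 mostly negative ones.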

The Decision Support Platform is used to analyze the tweets from Twitter. It has two parts: the app and the scraper. The app is the UI that presents the data in an interactive format, while the scraper fetches new tweets every day and saves them in the database. The application is built using ReactJS and Django.

Infrastructure Deployment

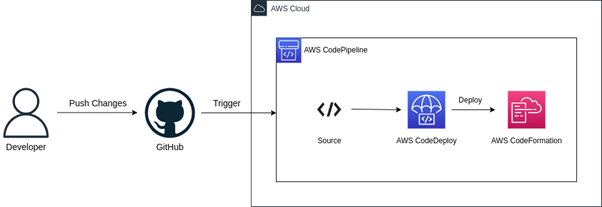

The infrastructure is deployed as an AWS CloudFormation stack. The CloudFormation template can be modified and redeployed using AWS CodePipeline: the developer pushes the changes to the GitHub repository, which triggers the pipeline, and the pipeline then uses AWS CodeDeploy to deploy the updated CloudFormation stack.

Application Deployment

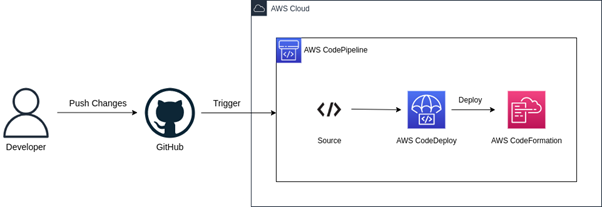

The application is deployed using AWS CodePipeline, which automates the deployment on every push to the repository. GitHub Actions detects which pipeline to trigger based on the files changed in the commit.
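The routing decision can be pictured with a small sketch. This is only an illustration of the logic in Python, not the actual workflow definition: the top-level directory names (`app`, `scraper`, `infrastructure`) and the pipeline names are hypothetical, since the repository layout is not described here.

```python
from pathlib import PurePosixPath

# Hypothetical mapping from top-level repository directory to pipeline name.
PIPELINE_BY_TOP_DIR = {
    "app": "app-pipeline",
    "scraper": "scraper-pipeline",
    "infrastructure": "infrastructure-pipeline",
}


def pipelines_to_trigger(changed_files):
    """Return the sorted pipeline names affected by the changed file paths."""
    triggered = set()
    for path in changed_files:
        parts = PurePosixPath(path).parts
        # Only files under a mapped top-level directory trigger a pipeline.
        if parts and parts[0] in PIPELINE_BY_TOP_DIR:
            triggered.add(PIPELINE_BY_TOP_DIR[parts[0]])
    return sorted(triggered)


if __name__ == "__main__":
    print(pipelines_to_trigger(["app/src/index.js", "README.md"]))
```

Under these assumptions, a commit that touches only files outside the mapped directories, such as a README, triggers no pipeline at all.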

The app pipeline has the following steps:

- Code is pushed to the GitHub repository.

- GitHub Actions detects the new changes and triggers AWS CodePipeline.

- In the first step of the pipeline, the source code is fetched from the GitHub repository.

- In the second step, AWS CodeBuild uses the source code artifact to build Docker images according to the buildspec.yml file.

- In the last step, AWS CodeDeploy updates the deployed instance. The new files are copied to the EC2 instance, and the appspec.yml file is used to deploy the new Docker images.

Scraper Deployment

The scraper files live in the same repository as the application. GitHub Actions detects which pipeline should be triggered based on the changes in the new commit.

The scraper pipeline has the following steps:

- In the first step of the pipeline, the source code is fetched from the GitHub repository.

- In the last step, AWS CodeDeploy copies the new files to the EC2 instance using the appspec.yml file.

After that, a cron job is created to run the scraper code automatically.

The cron job can be created with the following steps:

- Run “sudo crontab -e”

- Add the cron entry in the editor, e.g. “@daily echo 'Hello World'”

Because the command is run with sudo, this edits the root user’s crontab on the Linux machine.
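For the scraper itself, the entry would point at the scraper script instead of the echo example. The sketch below composes such a line; the `cron_entry` helper, the interpreter path, the script path, and the log path are all hypothetical and would need to match the actual EC2 instance.

```python
# Schedule nicknames accepted by common cron implementations.
CRON_NICKNAMES = {
    "@reboot", "@yearly", "@annually", "@monthly",
    "@weekly", "@daily", "@midnight", "@hourly",
}


def cron_entry(schedule: str, command: str, log_path: str) -> str:
    """Build one crontab line that runs `command` and appends output to a log."""
    fields = schedule.split()
    is_nickname = schedule in CRON_NICKNAMES
    is_five_field = len(fields) == 5  # minute hour day-of-month month day-of-week
    if not (is_nickname or is_five_field):
        raise ValueError(f"unsupported schedule: {schedule!r}")
    # Redirect stdout and stderr so failed runs leave a trace in the log.
    return f"{schedule} {command} >> {log_path} 2>&1"


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    print(cron_entry("@daily",
                     "/usr/bin/python3 /opt/scraper/run.py",
                     "/var/log/scraper.log"))
```

The printed line can then be pasted into the editor opened by “sudo crontab -e”.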

Benefits

Sentiment analysis makes it possible to analyze people’s opinions about specific incidents, products, or companies. Predicting the stock market is never easy and requires efficient models and platforms to perform effective analysis.