Today’s artificial intelligence systems can write code, generate images, and engage in complex conversations, yet many AI chatbots still struggle with basic tasks like maintaining context or taking meaningful actions based on user requests. The gap between simple chatbots and truly capable AI agents lies in their ability to combine reasoning, natural conversation, and real-world actions. While Large Language Models (LLMs) have revolutionized natural language processing, building effective AI agents requires careful integration of multiple components – from decision-making frameworks to action execution systems.

This comprehensive guide explores the essential components and implementation strategies for building advanced AI agents. We’ll examine how to combine LLMs with reasoning capabilities, develop sophisticated dialog systems, and create robust action frameworks. Whether you’re developing virtual assistants, customer service agents, or autonomous systems, you’ll learn practical approaches to create AI agents that can think, speak, and act effectively.

Understanding Modern Conversational AI

Modern conversational AI architecture combines several specialized components that work together to produce intelligent, interactive systems. Understanding this architecture is crucial for developing effective AI agents that can reason, communicate, and act meaningfully.

Core Components of AI Agents

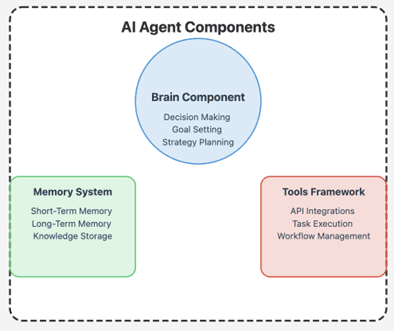

The foundation of modern AI agents rests on several essential components that work together seamlessly:

- Brain Component: Acts as the central decision-making unit, coordinating between other components and defining the agent’s goals and planning strategies

- Memory System: Comprises both short-term memory for immediate reasoning and long-term memory for storing conversation histories

- Tools Framework: Specialized APIs and workflows that enable specific task execution

- Planning Module: Handles task decomposition and execution strategy
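The division of labor among these components can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real framework's API: `Agent`, `Memory`, the `" then "` planning rule, and the tool names are all hypothetical, and a production agent would delegate planning and tool selection to an LLM rather than to string splitting.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Memory:
    # Short-term: recent turns used for immediate reasoning.
    short_term: List[str] = field(default_factory=list)
    # Long-term: persistent facts, e.g. user preferences.
    long_term: Dict[str, str] = field(default_factory=dict)


@dataclass
class Agent:
    memory: Memory = field(default_factory=Memory)
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def register_tool(self, name: str, fn: Callable[[str], str]) -> None:
        # Tools framework: named callables the agent is allowed to invoke.
        self.tools[name] = fn

    def plan(self, request: str) -> List[str]:
        # Planning module (hypothetical toy rule): split the request on " then ".
        return [s.strip() for s in request.split(" then ") if s.strip()]

    def handle(self, request: str) -> List[str]:
        # Brain: records the request, follows the plan, dispatches to tools.
        self.memory.short_term.append(f"user: {request}")
        results: List[str] = []
        for step in self.plan(request):
            name, _, arg = step.partition(" ")
            tool = self.tools.get(name)
            result = tool(arg) if tool else f"no tool named {name!r}"
            self.memory.short_term.append(f"{name}: {result}")
            results.append(result)
        return results


agent = Agent()
agent.register_tool("upper", str.upper)
agent.register_tool("reverse", lambda s: s[::-1])
results = agent.handle("upper hello then reverse abc")
print(results)  # ['HELLO', 'cba']
```

Every step's result is written back to short-term memory, so later reasoning can see what the tools returned.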

Role of Large Language Models

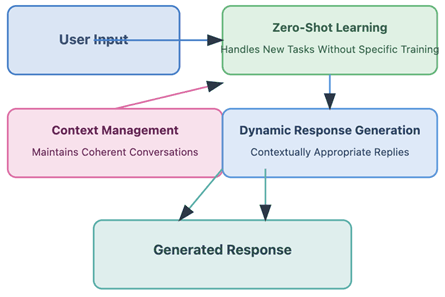

Large Language Models serve as the cognitive engine of modern conversational AI systems. Their strength in natural language understanding and generation enables AI agents to interpret user requests and produce fluent, human-like text. LLMs provide the foundation for features such as:

- Zero-Shot Learning: Handling new tasks from instructions alone, without task-specific training examples.

- Context Management: Maintaining coherent conversations across multiple turns.

- Dynamic Response Generation: Creating contextually appropriate and natural-sounding replies.

Integration Patterns and Approaches

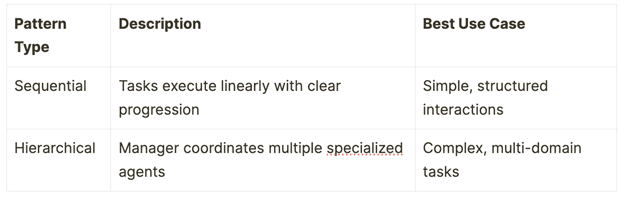

The architecture of conversational AI systems typically follows one of two primary integration patterns, illustrated in the figure above.

These patterns are supported by natural language processing components that handle input analysis, response generation, and dialog management. The system employs context management strategies to keep conversations coherent while orchestrating various services and APIs for task execution.

The integration framework determines how external services connect with the AI agent, managing API interactions, data access, and service orchestration. This framework ensures smooth communication between different components while maintaining security and performance standards.

Implementing the Reasoning Layer

The implementation of effective reasoning capabilities marks the crucial difference between simple chatbots and sophisticated AI agents. By combining multiple reasoning approaches, modern AI systems can process information more effectively and make better decisions.

Symbolic and Neural Reasoning Methods

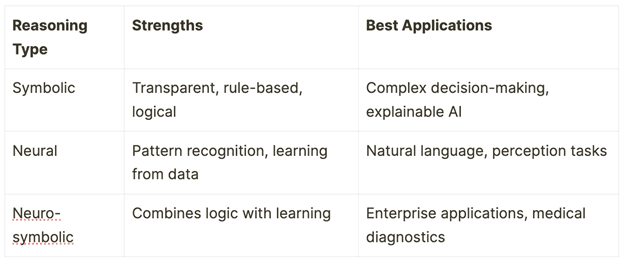

The integration of symbolic and neural reasoning creates a hybrid approach called neuro-symbolic AI. It pairs the pattern recognition and flexibility of neural models with the explicit rules and interpretable, verifiable inference of symbolic methods.

Context Management Strategies

Effective context management relies on sophisticated memory architectures that maintain conversation coherence and relevance. Key strategies include:

1. Short-term Memory Management

- Conversation history tracking

- State representation

- Dynamic context window utilization

2. Long-term Memory Integration

- Knowledge base development

- User profile maintenance

- External storage systems
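A minimal sketch of this short-term/long-term split, assuming a fixed token budget for the context window and approximating tokens by whitespace-separated words (a real system would count with the model's tokenizer). `ConversationMemory` and its methods are hypothetical names; the oldest turns are evicted first, while long-term profile facts are always re-injected into the context.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class ConversationMemory:
    """Sliding-window short-term memory plus a dict-based long-term store.

    Tokens are approximated by whitespace-separated words (an assumption);
    a real system would count with the model's own tokenizer.
    """

    def __init__(self, max_tokens: int = 50) -> None:
        self.max_tokens = max_tokens
        self.turns: Deque[Tuple[str, str]] = deque()  # (role, text) history
        self.profile: Dict[str, str] = {}             # long-term user facts

    @staticmethod
    def _count(text: str) -> int:
        return len(text.split())

    def _window_size(self) -> int:
        return sum(self._count(text) for _, text in self.turns)

    def add_turn(self, role: str, text: str) -> None:
        self.turns.append((role, text))
        # Evict the oldest turns until the window fits the budget,
        # always keeping at least the newest turn.
        while self._window_size() > self.max_tokens and len(self.turns) > 1:
            self.turns.popleft()

    def remember(self, key: str, value: str) -> None:
        self.profile[key] = value

    def build_context(self) -> List[str]:
        # Long-term facts first, then the surviving recent turns in order.
        facts = [f"[profile] {k}={v}" for k, v in sorted(self.profile.items())]
        return facts + [f"{role}: {text}" for role, text in self.turns]


mem = ConversationMemory(max_tokens=8)
mem.remember("name", "Ada")
mem.add_turn("user", "hi there")                   # window: 2 tokens
mem.add_turn("assistant", "hello how can I help")  # window: 7 tokens
mem.add_turn("user", "book a table")               # 10 > 8, oldest turn evicted
print(mem.build_context())
```

Evicting whole turns keeps the window readable; summarizing evicted turns into long-term memory is a common refinement that this sketch omits.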

Decision-Making Frameworks

The implementation of decision-making frameworks enables AI agents to make informed choices based on available information and objectives. Modern systems typically employ a combination of approaches:

1. Rational Decision Model: Implements logical decision-making by evaluating all possible alternatives systematically. This framework excels in situations where complete information is available and optimal choices are required.

2. Bounded Rationality: Acknowledges real-world constraints and enables AI agents to make “good enough” decisions when facing limited information or time constraints.

3. Data-Driven Frameworks: Utilize structured approaches for decision-making, incorporating decision trees and matrices for evaluating multiple options based on defined criteria.

The effectiveness of these frameworks is enhanced through context-aware disambiguation techniques and sophisticated error handling mechanisms. By implementing robust decision validation protocols, AI agents can maintain reliability while adapting to complex scenarios.
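The contrast between the rational model and bounded rationality can be made concrete with a small weighted decision matrix, in the spirit of the data-driven framework described above. The options, criteria, weights, and aspiration level below are invented for illustration: the rational chooser scores every option, while the satisficing chooser stops at the first option that is "good enough" within a limited evaluation budget.

```python
from typing import Dict, Optional

Options = Dict[str, Dict[str, float]]

# Hypothetical options for resolving a late delivery, scored 0-10 per
# criterion where higher is always better (a high "cost" score = cheap).
OPTIONS: Options = {
    "voucher":  {"cost": 7, "satisfaction": 6, "speed": 9},
    "refund":   {"cost": 3, "satisfaction": 9, "speed": 8},
    "escalate": {"cost": 5, "satisfaction": 7, "speed": 3},
}
WEIGHTS: Dict[str, float] = {"cost": 0.3, "satisfaction": 0.5, "speed": 0.2}


def weighted_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    # One row of a decision matrix: weighted sum of criterion scores.
    return sum(weights[c] * scores[c] for c in weights)


def rational_choice(options: Options, weights: Dict[str, float]) -> str:
    # Rational model: evaluate every alternative and return the best.
    return max(options, key=lambda name: weighted_score(options[name], weights))


def satisficing_choice(options: Options, weights: Dict[str, float],
                       aspiration: float, budget: int) -> Optional[str]:
    # Bounded rationality: inspect at most `budget` options, in order, and
    # accept the first whose score reaches the aspiration level.
    for name in list(options)[:budget]:
        if weighted_score(options[name], weights) >= aspiration:
            return name
    return None


print(rational_choice(OPTIONS, WEIGHTS))                    # refund (weighted score 7.0)
print(satisficing_choice(OPTIONS, WEIGHTS, 6.5, budget=2))  # voucher (weighted score 6.9)
```

Here the satisficing chooser accepts `voucher` after a single evaluation even though `refund` scores slightly higher, trading a small loss in quality for less work.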

Developing Advanced Dialog Capabilities

Building upon the foundational architecture and reasoning capabilities, the development of advanced dialog capabilities represents the core interface between AI agents and users. These capabilities transform raw language processing into meaningful, context-aware conversations.

Natural Language Understanding Components

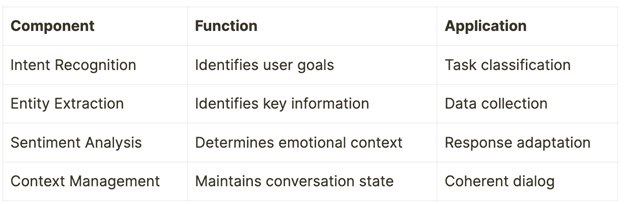

At the heart of dialog capabilities lies Natural Language Processing (NLP), which combines several sophisticated components. The primary elements include Natural Language Understanding (NLU) for comprehending user inputs and Natural Language Inference (NLI) for determining logical relationships between statements.

Response Generation Mechanisms

The Natural Language Generation (NLG) process transforms structured data into human-readable responses through a sophisticated seven-step pipeline:

- Content Determination: Selecting relevant information based on context

- Discourse Planning: Organizing response structure

- Sentence Aggregation: Combining related information

- Lexicalization: Choosing appropriate vocabulary

- Referring Expression Generation: Managing pronouns and references

- Syntactic Realization: Applying grammar rules

- Orthographic Realization: Formatting final text
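The seven stages can be illustrated with a deliberately tiny, template-based generator for order-status updates. It is a sketch, not a real NLG library: the lexicon, the discourse order, and the two-facts-per-sentence aggregation rule are assumptions chosen to make each stage visible, and LLM-based agents typically perform these stages implicitly rather than as separate steps.

```python
from typing import Dict, List, Tuple

# Hypothetical lexicon: predicate code -> verb-phrase template.
LEXICON = {"status": "is {}", "carrier": "is being delivered by {}",
           "eta": "should arrive {}"}
ORDER = ["status", "carrier", "eta"]  # assumed discourse plan for order updates


def generate(record: Dict[str, str], wanted: List[str]) -> str:
    # 1. Content determination: keep only the requested, known fields.
    facts: List[Tuple[str, str]] = [(k, record[k]) for k in wanted
                                    if k in record and k in LEXICON]
    # 2. Discourse planning: present facts in a fixed, logical order.
    facts.sort(key=lambda fact: ORDER.index(fact[0]))
    # 3. Sentence aggregation: combine at most two facts per sentence.
    groups = [facts[i:i + 2] for i in range(0, len(facts), 2)]
    sentences: List[str] = []
    for n, group in enumerate(groups):
        # 4. Lexicalization: pick a verb phrase for each predicate.
        phrases = [LEXICON[pred].format(value) for pred, value in group]
        # 5. Referring expression: full noun phrase first, pronoun afterwards.
        subject = "your order" if n == 0 else "it"
        # 6. Syntactic realization: subject plus coordinated predicates.
        clause = subject + " " + " and ".join(phrases)
        # 7. Orthographic realization: capitalization and final punctuation.
        sentences.append(clause[0].upper() + clause[1:] + ".")
    return " ".join(sentences)


record = {"status": "in transit", "eta": "on Friday",
          "carrier": "a local courier", "weight": "2 kg"}
print(generate(record, ["eta", "status", "carrier"]))
# Your order is in transit and is being delivered by a local courier. It should arrive on Friday.
```

The unrequested `weight` field never reaches the output because content determination filters it out before any wording decisions are made.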

Conversation Flow Management

Modern AI agents employ advanced conversation flow management techniques to maintain coherent and purposeful dialogs. The system tracks conversation states, manages multiple dialog sessions, and ensures appropriate response selection based on context.

Key Features of Flow Management:

- Visual representation of user journeys

- Tracking of commonly used tasks and utterances

- Analysis of conversation patterns

- Management of dropout points

- Multi-language support across 96 languages
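Dropout points and user journeys can be derived directly from logged dialog-state sequences. The sketch below assumes each session is recorded as an ordered list of state names that ends at `done` on success; the state names and logs are hypothetical. Transition counts give the edges of a user-journey diagram, and the last state of each unfinished session marks where the user dropped out.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical logs: the dialog states each session passed through.
SESSIONS: Dict[str, List[str]] = {
    "s1": ["greet", "ask_date", "ask_party_size", "confirm", "done"],
    "s2": ["greet", "ask_date"],
    "s3": ["greet", "ask_date", "ask_party_size"],
    "s4": ["greet", "ask_date"],
    "s5": ["greet", "ask_date", "ask_party_size", "confirm", "done"],
}


def journey_edges(sessions: Dict[str, List[str]]) -> Counter:
    # State-to-state transition counts: the edges of a user-journey graph.
    return Counter((a, b) for path in sessions.values()
                   for a, b in zip(path, path[1:]))


def dropout_points(sessions: Dict[str, List[str]], goal: str = "done") -> Counter:
    # A session that never reached the goal dropped out at its last state.
    return Counter(path[-1] for path in sessions.values()
                   if path and goal not in path)


def completion_rate(sessions: Dict[str, List[str]], goal: str = "done") -> float:
    return sum(goal in path for path in sessions.values()) / len(sessions)


print(dropout_points(SESSIONS).most_common())  # [('ask_date', 2), ('ask_party_size', 1)]
print(completion_rate(SESSIONS))               # 0.4
print(journey_edges(SESSIONS)[("greet", "ask_date")])  # 5
```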

The integration of these components creates a robust dialog system capable of understanding complex queries, generating contextually appropriate responses, and maintaining coherent conversations across multiple turns. This sophisticated approach enables AI agents to handle nuanced interactions while providing consistent and reliable user experiences.

Building the Action Execution Framework

The execution framework serves as the bridge between an AI agent’s decision-making capabilities and real-world actions. This critical component transforms abstract plans into concrete operations, enabling AI agents to interact meaningfully with external systems and services.

Task Planning and Decomposition

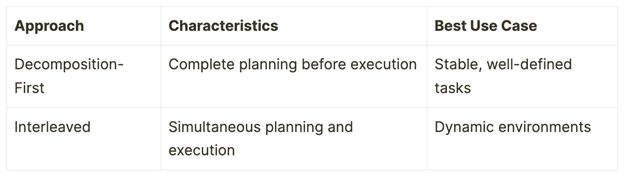

Task decomposition forms the foundation of efficient action execution in AI systems. The framework employs two primary approaches for breaking down complex tasks:

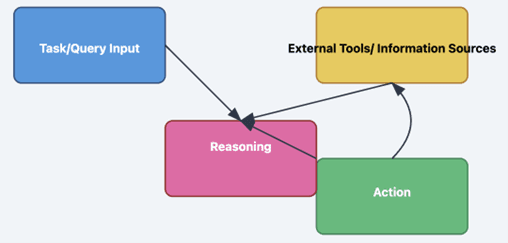

The ReAct (Reasoning and Acting) pattern enhances this framework by combining reasoning traces with actions in a continuous feedback loop. This integration enables AI agents to think critically about their actions while adjusting strategies based on immediate feedback.

ReAct Agent Workflow

A ReAct (Reasoning and Acting) agent is an AI design pattern that dynamically combines reasoning and action-taking. Unlike traditional task-solving approaches, it integrates three core components: task input, reasoning, and action execution. The agent continuously cycles through understanding a problem, planning a solution, and taking concrete steps to address it. By calling external tools and information sources, the agent can adapt its approach flexibly, breaking complex tasks down into manageable actions.

This iterative process allows the AI to reason about challenges, retrieve relevant information, execute actions, and refine its strategy in real time, making problem-solving more adaptive and responsive.
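The Thought, Action, Observation cycle can be shown with a minimal loop. To keep the sketch self-contained and deterministic, `scripted_policy` stands in for the LLM that would normally generate each thought and action, and the tools (`check_stock`, `add`) and inventory data are hypothetical. The structure is the point rather than the policy: each observation is appended to the transcript and fed back into the next reasoning step.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; a real agent would call search APIs, databases, etc.
STOCK = {"widget": 40, "gadget": 15}
TOOLS: Dict[str, Callable[[str], str]] = {
    "check_stock": lambda item: str(STOCK.get(item, "unknown")),
    "add": lambda args: str(sum(int(x) for x in args.split(","))),
}

Step = Tuple[str, str, str]  # (thought, action, action_input)
Policy = Callable[[str, List[str]], Step]


def scripted_policy(question: str, transcript: List[str]) -> Step:
    """Stand-in for the LLM: picks the next thought and action."""
    obs = [line[len("Observation: "):] for line in transcript
           if line.startswith("Observation: ")]
    if len(obs) == 0:
        return ("I need the widget count.", "check_stock", "widget")
    if len(obs) == 1:
        return ("Now I need the gadget count.", "check_stock", "gadget")
    if len(obs) == 2:
        return ("I should add the two counts.", "add", ",".join(obs))
    return ("I have the total.", "finish", obs[-1])


def react(question: str, policy: Policy = scripted_policy,
          max_steps: int = 5) -> Tuple[str, List[str]]:
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, arg = policy(question, transcript)   # reason
        transcript.append(f"Thought: {thought}")
        if action == "finish":
            transcript.append(f"Answer: {arg}")
            return arg, transcript
        transcript.append(f"Action: {action}[{arg}]")
        observation = TOOLS[action](arg)                      # act
        transcript.append(f"Observation: {observation}")      # observe, then loop
    return "no answer within step budget", transcript


answer, trace = react("How many widgets and gadgets are in stock in total?")
print("\n".join(trace))
print(answer)  # 55
```

Capping the loop with `max_steps` is a simple safeguard against an agent that never decides to finish.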

API Integration and Service Orchestration

The orchestration layer serves as the backbone of the action execution framework, managing interactions between various components of the application architecture. Key elements of successful service orchestration include:

Dynamic Resource Management

- Automated scaling based on demand

- Efficient resource allocation

- Load balancing across services

The framework relies on well-defined API contracts between services and places authentication layers in front of them to protect sensitive information while keeping data flowing efficiently. This orchestration keeps workflows consistent across multiple services and environments.

Error Handling and Recovery

Robust error handling mechanisms are essential for maintaining system reliability. The framework implements a comprehensive error management strategy through:

1. Proactive Monitoring

- Real-time analysis of system performance

- Anomaly detection and prevention

- Automated fault detection

2. Recovery Mechanisms

- Automatic retry mechanisms for failed operations

- Graceful degradation protocols

- State management during failures

Wrapping risky operations in try/catch blocks lets the system handle errors gracefully while maintaining operational continuity. This approach allows AI agents to recover from failures without compromising overall system integrity or the user experience.
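The retry and graceful-degradation mechanisms above, combined with try/catch handling (Python's `try`/`except`), might look like the following sketch. `call_with_recovery` is a hypothetical helper; which exception types count as transient, the backoff parameters, and the fallback values are assumptions to adapt to the services involved.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_recovery(
    op: Callable[[], T],
    fallback: Callable[[], T],
    retries: int = 3,
    base_delay: float = 0.01,
    retry_on: Tuple[Type[Exception], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Retry transient failures with exponential backoff, then degrade gracefully.

    Exceptions not listed in `retry_on` (e.g. ValueError) propagate unchanged.
    """
    for attempt in range(retries):
        try:
            return op()
        except retry_on:
            if attempt == retries - 1:
                break  # retries exhausted
            # Exponential backoff (1x, 2x, 4x ... base_delay) with a 1-2x jitter factor.
            time.sleep(base_delay * (2 ** attempt) * (1 + random.random()))
    # Graceful degradation: serve a cached or simplified result instead of failing.
    return fallback()


calls = {"n": 0}


def flaky_weather_api() -> str:
    # Hypothetical service that fails twice, then recovers.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("upstream unavailable")
    return "sunny"


def always_down() -> str:
    raise TimeoutError("no response")


print(call_with_recovery(flaky_weather_api, fallback=lambda: "weather unavailable"))  # sunny
print(call_with_recovery(always_down, fallback=lambda: "cached forecast"))  # cached forecast
```

Retrying only exceptions known to be transient keeps genuine bugs from being masked, and the jittered backoff keeps many clients from retrying in lockstep against a recovering service.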

By leveraging real-time data analysis and machine learning techniques, the framework continuously improves its ability to detect and resolve issues swiftly. This adaptive approach ensures that AI agents can maintain reliable performance while handling complex tasks in dynamic environments.

Performance Optimization and Scaling

Optimizing performance in conversational AI systems requires a delicate balance between speed, efficiency, and reliability. As these systems scale to handle increasing workloads, maintaining responsive interactions while managing computational resources becomes crucial.

Latency Reduction Techniques

In real-time communications, latency significantly affects conversation quality and user experience. The International Telecommunication Union (ITU-T Recommendation G.114) recommends keeping one-way latency at or below 150 ms for conversational use cases, a budget that is difficult to meet in RAG-based architectures, where retrieval adds a step before generation can begin.

Key strategies for reducing latency include:

- Streaming Implementation: Utilize real-time streaming instead of batch processing for Speech-to-Text services

- Vector Search Optimization: Implement shorter embedding vectors and quick rescoring algorithms

- Response Generation: Focus on Time to First Token (TTFT) optimization

- Context Caching: Store frequently accessed information locally

The implementation of these techniques can significantly reduce perceived latency, particularly in interactive applications like virtual assistants and real-time content generation systems.
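Because streaming shows output as soon as the first token arrives, perceived latency depends mostly on TTFT rather than on total generation time. The sketch below measures both; `simulated_stream` is a hypothetical stand-in for a streaming model or Speech-to-Text API, and its delays are arbitrary values chosen for illustration, not benchmarks.

```python
import time
from typing import Iterator, List, Optional, Tuple


def simulated_stream(tokens: List[str], first_delay: float,
                     per_token: float) -> Iterator[str]:
    """Hypothetical stand-in for a streaming LLM or STT API."""
    time.sleep(first_delay)          # queueing + prompt processing before token 1
    for i, tok in enumerate(tokens):
        if i:
            time.sleep(per_token)    # decode time for each later token
        yield tok


def measure(stream: Iterator[str]) -> Tuple[Optional[float], float, str]:
    """Return (time to first token, total time, text), times in seconds."""
    start = time.perf_counter()
    ttft: Optional[float] = None     # stays None if the stream is empty
    parts: List[str] = []
    for tok in stream:
        if ttft is None:
            ttft = time.perf_counter() - start
        parts.append(tok)            # a UI would render each token here
    return ttft, time.perf_counter() - start, "".join(parts)


ttft, total, text = measure(simulated_stream(["Hel", "lo", "!"], 0.05, 0.02))
print(f"TTFT {ttft * 1000:.0f} ms, total {total * 1000:.0f} ms: {text}")
```

With batch processing the user waits the full `total` before seeing anything; with streaming, the first text appears after `ttft`.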

Resource Optimization Strategies

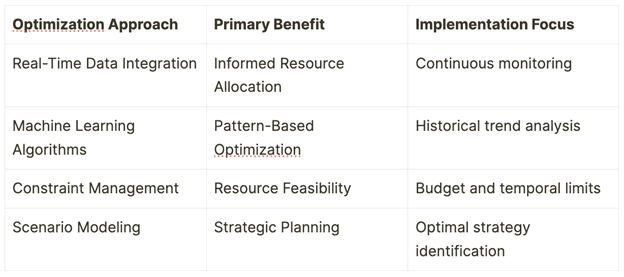

Resource optimization in AI systems focuses on maximizing efficiency while maintaining performance. The framework employs dynamic resource allocation that adapts based on evolving requirements and real-time data integration.

Token efficiency plays a crucial role in resource optimization. Some studies report that optimized models can match baseline performance while using up to 88.5% fewer tokens, which reduces computational overhead and improves response times.

Load Balancing and Distribution

Modern AI data centers face a particular challenge from “elephant flows”: long-lived, high-bandwidth flows, such as the RDMA traffic generated by GPU clusters. Effective load balancing becomes critical for managing these high-volume data transfers.

Load balancing implementations utilize two primary approaches:

1. Static Hash-Based Distribution

- Packet header analysis

- Flow table management

- Consistent routing paths

2. Dynamic Load Balancing

- Link utilization monitoring

- Queue management

- Quality band assignment

- Adaptive routing decisions

The system monitors link utilization and queue status and automatically adjusts distribution patterns to prevent network congestion. This keeps bandwidth utilization high even for low-entropy workloads, where only a few large flows exist and static hashing has too little variety to spread them evenly.
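The difference between the two approaches can be sketched by placing a few large flows onto parallel links. Assumptions: `static_hash_link` hashes the 5-tuple with SHA-256 as a stand-in for a switch's ECMP hash, `dynamic_link` simply picks the least-loaded link when a flow arrives (hardware dynamic load balancing often re-decides per flowlet using quantized utilization and queue measurements), and the four 100 Gb/s flows are hypothetical.

```python
import hashlib
from typing import List, Tuple

Flow = Tuple[str, str, int, int, str]  # (src_ip, dst_ip, src_port, dst_port, proto)


def static_hash_link(flow: Flow, n_links: int) -> int:
    # Static, ECMP-style: hash the header 5-tuple; a flow always maps to one link.
    key = "|".join(map(str, flow)).encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:4], "big") % n_links


def dynamic_link(load: List[float]) -> int:
    # Dynamic: pick the currently least-utilized link (ties -> lowest index).
    return min(range(len(load)), key=lambda i: load[i])


def place(flows: List[Tuple[Flow, float]], n_links: int, dynamic: bool) -> List[float]:
    load = [0.0] * n_links
    for flow, gbps in flows:                      # flows arrive in order
        link = dynamic_link(load) if dynamic else static_hash_link(flow, n_links)
        load[link] += gbps
    return load


# Four hypothetical 100 Gb/s RDMA "elephant" flows (RoCEv2 uses UDP port 4791).
elephants = [((f"10.0.0.{i}", "10.0.1.1", 49152 + i, 4791, "udp"), 100.0)
             for i in range(4)]
print("static :", place(elephants, 4, dynamic=False))  # collisions depend on the hash
print("dynamic:", place(elephants, 4, dynamic=True))   # [100.0, 100.0, 100.0, 100.0]
```

With only four flows the hash has little entropy to work with: even an ideal random hash puts at least two elephants on the same link about 91% of the time (1 − 4!/4⁴), whereas the least-loaded policy spreads them one per link by construction.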

Performance monitoring integrates continuous feedback loops that analyze conversation transcripts, popular topics, and interaction patterns. This data-driven approach enables periodic reviews and improvements, ensuring the system maintains optimal performance as it scales across multiple platforms and channels.

Conclusion

Building advanced conversational AI agents demands careful integration of multiple sophisticated components – from reasoning engines to action frameworks. This comprehensive exploration has covered the essential elements needed to create AI systems that think, communicate, and act effectively. The journey through modern AI architecture revealed how brain components, memory systems, and planning modules work together to enable intelligent decision-making. Large Language Models serve as powerful cognitive engines, while hybrid reasoning approaches combine symbolic and neural methods for enhanced problem-solving capabilities.

Advanced dialog systems transform these technical capabilities into meaningful conversations through sophisticated NLP components and response generation mechanisms. The action execution framework bridges the gap between decisions and real-world impact, supported by robust error handling and recovery systems. Performance optimization emerges as a critical factor in scaling these systems, with specific focus on latency reduction, resource management, and load balancing. These optimization strategies ensure AI agents maintain their effectiveness even as demand grows.