Function calling marks a significant step forward in the capabilities of Large Language Models (LLMs). It enables LLMs to interact with external tools and APIs, extending their utility beyond text generation. With function calling, developers can build more powerful and versatile AI systems that perform complex tasks and provide more accurate, context-aware responses.

This article delves into the evolution of LLMs and their journey towards function calling capabilities. It explores the key components that make up function calling systems and examines how they work in tandem with other AI techniques. By understanding these aspects, readers will gain insights into the potential applications of function calling in LLMs and its impact on the future of AI-driven solutions across various domains.

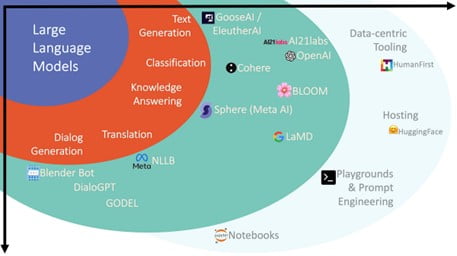

The Evolution of LLMs: From Text Generation to Function Calling

Traditional LLM Capabilities

Large Language Models (LLMs) have come a long way since their inception. At their core, LLMs are artificial intelligence models designed to understand and generate human language. These models are trained on massive amounts of text, primarily from the internet, which enables them to generate coherent and meaningful sentences.

The fundamental job of a language model is to calculate the probability of each possible next word given the text that precedes it. For example, when presented with “The sky is ____,” the most likely answer would be “blue.” This process forms the basis of pre-trained language models, which can be fine-tuned for various practical applications, such as translation or building expertise in specific knowledge domains like law or medicine.
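To make the next-word idea concrete, here is a minimal sketch that estimates next-word probabilities by counting continuations in a tiny, made-up corpus. Real LLMs use neural networks trained on vastly more data; the corpus and the `next_word_probabilities` helper are illustrative assumptions, not how any particular model is implemented.

```python
from collections import Counter

# Toy corpus (hypothetical); a real model learns from billions of tokens.
corpus = [
    "the sky is blue",
    "the sky is blue",
    "the sky is clear",
    "the sea is blue",
]

def next_word_probabilities(context: str, sentences: list) -> dict:
    """Estimate P(next word | context) by counting what follows the context."""
    context_words = context.split()
    n = len(context_words)
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        # Slide over the sentence and record the word after each match.
        for i in range(len(words) - n):
            if words[i:i + n] == context_words:
                counts[words[i + n]] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {word: count / total for word, count in counts.items()}

probs = next_word_probabilities("the sky is", corpus)
best = max(probs, key=probs.get)  # the most likely continuation
print(best, probs)
```

In this toy corpus, "blue" follows "the sky is" twice and "clear" once, so "blue" is the most likely continuation.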

The Need for Enhanced Functionality

As LLMs evolved, their capabilities expanded beyond simple text generation. GPT-1 set the stage by performing basic tasks like answering questions. GPT-2 was significantly larger, with more than ten times the parameters of GPT-1, allowing it to produce human-like text and perform certain tasks automatically. The introduction of GPT-3 marked a significant milestone, as it brought problem-solving capabilities to the public.

However, despite these advancements, LLMs still faced limitations. They lacked access to up-to-date information, as their training data was fixed at a certain point in time. Their reliability was also not always optimal, with issues such as hallucinations: outputs that are coherent and grammatically correct but factually incorrect or nonsensical.

Emergence of Function Calling

To address these limitations and enhance the capabilities of LLMs, the concept of function calling emerged. Function calling significantly broadens what these frontier models can do. It allows AI models to go beyond basic text generation and language understanding by requesting the execution of external functions.

Function calling enables AI systems to take actions through external function calls, driven by conversational prompts. This capability transforms AI from simple text generators into dynamic assistants, paving the way for the next wave of intelligent applications. It facilitates access to real-time data, database queries, device control, and more, making AI systems more versatile, responsive, adaptive, and interactive.

The integration of function calling with other techniques, such as Retrieval Augmented Generation (RAG), has led to more sophisticated AI applications. For instance, RAG-equipped systems can use retrieved content to guide function calls, resulting in more adaptable AI assistants.

This evolution has opened up new possibilities for creating powerful and versatile AI systems capable of performing complex tasks and providing more accurate and context-aware responses. As AI continues to progress, function calling will enable models to orchestrate increasingly complex chains of external services through natural language instructions, making it valuable across many real-world applications.

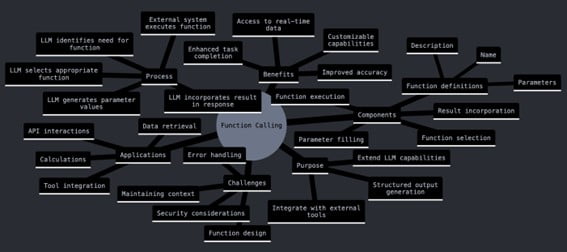

Key Components of Function Calling Systems

Function calling systems in Large Language Models (LLMs) consist of several key components that work together to enable effective interaction with external tools and APIs. These components allow LLMs to extend their capabilities beyond text generation, making them more versatile and powerful in various applications.

Function Definitions and Schemas

At the core of function calling systems are the function definitions and schemas. These provide the LLM with a structured description of available functions or tools. Function definitions typically include:

- Function name

- Description of its purpose

- Required parameters and their types

- Expected output format

Different LLM providers use varying formats for function definitions. For instance, OpenAI describes function parameters with a JSON schema aligned with the one used in OpenAPI. Mistral and Anthropic use similar approaches, while Cohere opts for a leaner definition focusing on parameter definitions.
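As an illustration, a function definition in the JSON Schema style might look like the sketch below. The `get_current_weather` function, its parameters, and the `missing_required` helper are hypothetical, and exact field names differ between providers, so check your provider's documentation before relying on this layout.

```python
import json

# Hypothetical tool definition in a JSON Schema style.
# Field names vary by provider; this layout is an assumption for illustration.
get_weather_tool = {
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": "Get the current weather for a given city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name, e.g. Paris"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    },
}

def missing_required(tool: dict, arguments: dict) -> list:
    """Return the required parameter names that are absent from the arguments."""
    required = tool["function"]["parameters"].get("required", [])
    return [name for name in required if name not in arguments]

# The definition is plain data, so it serializes directly to JSON.
print(json.dumps(get_weather_tool, indent=2))
print(missing_required(get_weather_tool, {"unit": "celsius"}))
```

A definition in this style describes the function's inputs only. If the expected output format matters, one option is to state it in the description text.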

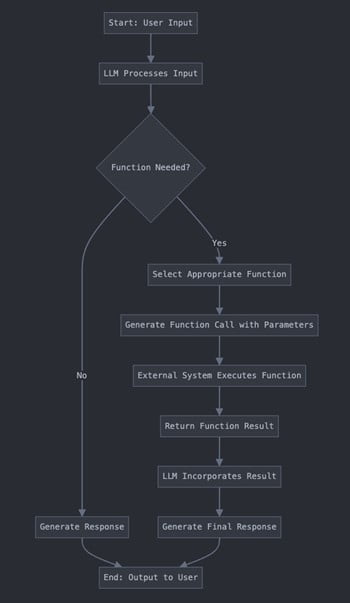

Here’s a brief explanation of each step in the function calling workflow shown in the diagram above:

- Start: User Input – The process begins with the user providing input to the LLM.

- LLM Processes Input – The LLM analyzes and interprets the user’s input.

- Function Needed? – The LLM determines whether a function call is necessary to respond to the user’s input.

If No Function Needed:

- Generate Response – The LLM generates a response using only its training data and the conversation context.

If Function Needed:

- Select Appropriate Function – The LLM chooses the most suitable function from available options.

- Generate Function Call with Parameters – The LLM prepares the function call, including necessary parameters.

- External System Executes Function – The function is executed outside the LLM, potentially accessing external data or tools.

- Return Function Result – The result of the function execution is sent back to the LLM.

- LLM Incorporates Result – The LLM integrates the function result into its processing.

- Generate Final Response – The LLM creates a response that incorporates the function result.

- End: Output to User – The final response is presented to the user.

This workflow demonstrates how function calling allows LLMs to extend their capabilities by interacting with external systems and data sources when needed.
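The workflow can be sketched in a few lines of Python. To keep the example runnable without any API key, the model is replaced by a stub, `fake_llm`, that mimics the two kinds of output (plain text or a function call). All names here are hypothetical, and a real application would call a provider's API where the stub is used.

```python
import json
from typing import Callable, Optional

def get_current_weather(city: str) -> dict:
    """Stand-in for a real weather API call (returns canned data)."""
    return {"city": city, "temperature_c": 21, "condition": "sunny"}

# Registry of functions the application is willing to execute.
AVAILABLE_FUNCTIONS: dict = {"get_current_weather": get_current_weather}

def fake_llm(user_input: str, function_result: Optional[dict] = None) -> dict:
    """Stub standing in for a real model."""
    if function_result is not None:
        # Second pass: incorporate the function result into the final answer.
        text = (f"It is {function_result['temperature_c']} degrees Celsius and "
                f"{function_result['condition']} in {function_result['city']}.")
        return {"type": "text", "content": text}
    if "weather" in user_input.lower():
        # Function needed: emit a structured call instead of prose.
        return {"type": "function_call", "name": "get_current_weather",
                "arguments": json.dumps({"city": "Paris"})}
    # No function needed: answer directly.
    return {"type": "text", "content": "Hello! How can I help?"}

def answer(user_input: str) -> str:
    reply = fake_llm(user_input)
    if reply["type"] == "text":
        return reply["content"]
    # The external system, not the model, executes the function.
    function: Callable[..., dict] = AVAILABLE_FUNCTIONS[reply["name"]]
    result = function(**json.loads(reply["arguments"]))
    # Return the result to the model for the final response.
    return fake_llm(user_input, function_result=result)["content"]

print(answer("What's the weather in Paris?"))
print(answer("Hi"))
```

Note that the model never runs code itself: it only names a function and supplies arguments, and the application decides whether and how to execute the call.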

Natural Language Understanding for Function Selection

LLMs with function calling capabilities use natural language processing to analyze user prompts and determine when external function execution is necessary. This process involves:

- Interpreting the user’s intent

- Matching the intent to available functions

- Selecting the most appropriate function for the task

Not all LLMs possess this ability; only those specifically trained or fine-tuned can perform function calling. The Berkeley Function-Calling Leaderboard provides insights into how different LLMs perform across various programming languages and API scenarios.

Execution and Response Generation

Once a function has been selected, the LLM generates a structured response, typically in JSON format, containing the function name and necessary arguments. This response is then processed by an external application, which:

- Parses the LLM’s response

- Executes the specified function with the provided arguments

- Returns the function’s output to the LLM

The LLM then incorporates this output into its final response, providing a coherent and contextually relevant answer to the user’s query. This process often involves two separate invocations of the LLM: one to map the prompt to a function call, and another to generate the final response based on the function’s output.
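The application-side half of this exchange (parse, execute, return) might look like the following sketch. The JSON layout with `name` and `arguments` keys, the `convert_currency` function, and the error format are assumptions for illustration; real provider responses wrap the call in additional fields.

```python
import json

def convert_currency(amount: float, rate: float) -> dict:
    """Hypothetical example function exposed by the application."""
    return {"converted": round(amount * rate, 2)}

FUNCTIONS = {"convert_currency": convert_currency}

def execute_function_call(raw_response: str) -> str:
    """Parse a JSON function call, run it, and return a JSON result string."""
    try:
        call = json.loads(raw_response)
        name, arguments = call["name"], call["arguments"]
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        # Model output is untrusted text, so malformed calls must be handled.
        return json.dumps({"error": f"malformed function call: {exc}"})
    function = FUNCTIONS.get(name)
    if function is None:
        return json.dumps({"error": f"unknown function: {name}"})
    try:
        return json.dumps(function(**arguments))
    except TypeError as exc:
        # Raised when arguments are missing, unexpected, or not a mapping.
        return json.dumps({"error": f"bad arguments: {exc}"})

raw = '{"name": "convert_currency", "arguments": {"amount": 100, "rate": 0.92}}'
print(execute_function_call(raw))
```

Returning errors as JSON rather than raising lets the application pass the failure back to the model in the second invocation, so the model can retry or explain the problem to the user.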

By combining these components, function calling systems enable LLMs to interact with external tools and APIs, significantly enhancing their capabilities and allowing for more complex and practical applications in various domains.

Integrating Function Calling with Other AI Techniques

Function Calling and Retrieval Augmented Generation (RAG)

Function calling and Retrieval Augmented Generation (RAG) are two powerful techniques that can be combined to enhance the capabilities of Large Language Models (LLMs). While function calling enables LLMs to interact with external tools and APIs, RAG allows models to pull in real-time, contextually relevant information from external knowledge sources. This combination creates AI solutions that can both generate highly accurate content and perform complex actions.

For instance, RAG might retrieve the latest product specifications from a database, while function calling processes an order based on that information. This integration is particularly useful for applications that require up-to-date information and the ability to perform specific actions.
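A toy version of the product example is sketched below. Retrieval is reduced to simple word overlap and the model's decision is hard-coded, so this only illustrates how the two steps connect. The product data, `retrieve`, and `place_order` are all hypothetical; a real system would typically use embedding-based search and let the LLM emit the function call.

```python
# Hypothetical product knowledge base.
PRODUCT_DOCS = [
    {"sku": "LAMP-01", "text": "Desk lamp, 12 W LED, price 30 USD"},
    {"sku": "CHAIR-07", "text": "Office chair, adjustable height, price 120 USD"},
]

def tokenize(text: str) -> set:
    """Lowercase, drop commas, and split into a set of words."""
    return set(text.lower().replace(",", "").split())

def retrieve(query: str, docs: list) -> dict:
    """Toy retriever: return the document sharing the most words with the query."""
    query_words = tokenize(query)
    return max(docs, key=lambda doc: len(query_words & tokenize(doc["text"])))

def place_order(sku: str, quantity: int) -> dict:
    """Stand-in for a real order API."""
    return {"status": "confirmed", "sku": sku, "quantity": quantity}

def handle_request(query: str, quantity: int) -> dict:
    # Step 1 (RAG): fetch the most relevant product document.
    doc = retrieve(query, PRODUCT_DOCS)
    # Step 2 (function calling): in a real system the LLM would read
    # doc["text"] and emit this call itself; here it is hard-coded.
    return place_order(sku=doc["sku"], quantity=quantity)

print(handle_request("I want the office chair", 2))
```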

13F Research Assistant (Beta) is a GPT we built last year that uses function calling to query 13F filings. The tool is designed to support financial analysis by giving rapid, well-informed answers to a broad range of questions about 13F filings.

What Can the 13F Research Assistant Do?

- Decode the intricacies behind hedge fund and asset manager filings.

- Uncover trends and patterns in investment behavior.

- Respond to inquiries about portfolio holdings and temporal changes.

- Provide insights into the strategic approaches of leading financial institutions.

You can access the 13F Research Assistant GPT from here.

Combining Function Calling with Fine-Tuning

Fine-tuning an AI model for function calling can significantly enhance its ability to execute specific tasks with precision and reliability. This process involves training the model on a dataset that reflects the specific tasks it needs to perform, including examples of when and how to call external functions.

For example, a model fine-tuned for weather-related queries would be more adept at identifying relevant triggers for calling a weather API and integrating the returned data into its response. Tools like the Visual Function Calling Editor from FinetuneDB simplify this process, allowing users to manage and integrate external functions into fine-tuned models without complex coding.
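To give a rough idea of what such a dataset could contain, the sketch below builds two training examples: one where the right behavior is to call a function, and one where it is not. The record layout (`messages`, `function_call`) is an assumption modeled on common chat formats; the exact schema depends on the provider or tool you fine-tune with.

```python
import json

# Hypothetical training examples; the record layout is an assumption.
training_examples = [
    {   # Positive case: the assistant should call the weather function.
        "messages": [
            {"role": "user", "content": "Do I need an umbrella in Oslo today?"},
            {"role": "assistant", "function_call": {
                "name": "get_current_weather",
                "arguments": json.dumps({"city": "Oslo"}),
            }},
        ]
    },
    {   # Negative case: no function call is appropriate.
        "messages": [
            {"role": "user", "content": "Tell me a joke."},
            {"role": "assistant",
             "content": "Why did the cloud stay home? It was under the weather."},
        ]
    },
]

def to_jsonl(examples: list) -> str:
    """Serialize examples as JSON Lines, one record per line."""
    return "\n".join(json.dumps(example) for example in examples)

print(to_jsonl(training_examples))
```

Including negative cases alongside positive ones is a design choice worth considering, since the model needs to learn when not to call a function as well as when to call one.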

Multi-Modal Function Calling

The potential of function calling extends beyond text-based interactions. With structural decoding, LLMs can handle multi-modal inputs, such as images and audio. This capability opens up new possibilities for applications in various fields:

- Image Analysis: LLMs can interpret and generate outputs based on visual data, enhancing applications in healthcare and security.

- Audio Processing: By integrating audio inputs, LLMs can transcribe and analyze spoken language, making them valuable in customer service and transcription services.

These advancements in multi-modal function calling allow LLMs to process and analyze data more effectively, providing insights that drive decision-making across different domains.

Conclusion

Function calling has brought about a revolution in the world of Large Language Models, expanding their abilities beyond simple text generation. This advancement has a significant impact on creating AI systems that can tackle complex tasks and provide more accurate, context-aware responses. By enabling LLMs to interact with external tools and APIs, function calling opens up new possibilities to develop more powerful and versatile AI solutions across various fields.

Looking ahead, the combination of function calling with other AI techniques like Retrieval Augmented Generation and fine-tuning promises to push the boundaries of what AI can do. This fusion allows for the creation of AI systems that can not only access up-to-date information but also perform specific actions based on that data. As this technology keeps evolving, we can expect to see more applications that blend natural language processing with real-world interactions, paving the way for more advanced and practical AI-driven solutions in numerous industries.