Today, businesses that listen to customers are winning more revenue, and in turn, are capturing more customers. Indeed, it is a vicious circle.

Processing information using advanced analytics algorithms is a go-to or quick solution for many business problems. Data is overflowing from various channels, including social media, click-streams, and connected devices. Organizations that implement data lakes can derive value from these unscathed data sources.

We’re going to together go through a basic tutorial comprising fundamental and essential facts of data lakes. The following narrative will bolster your understanding of data lakes and their role within an analytics solution.

What are data lakes, lake houses, and data warehouses? How do they compare?

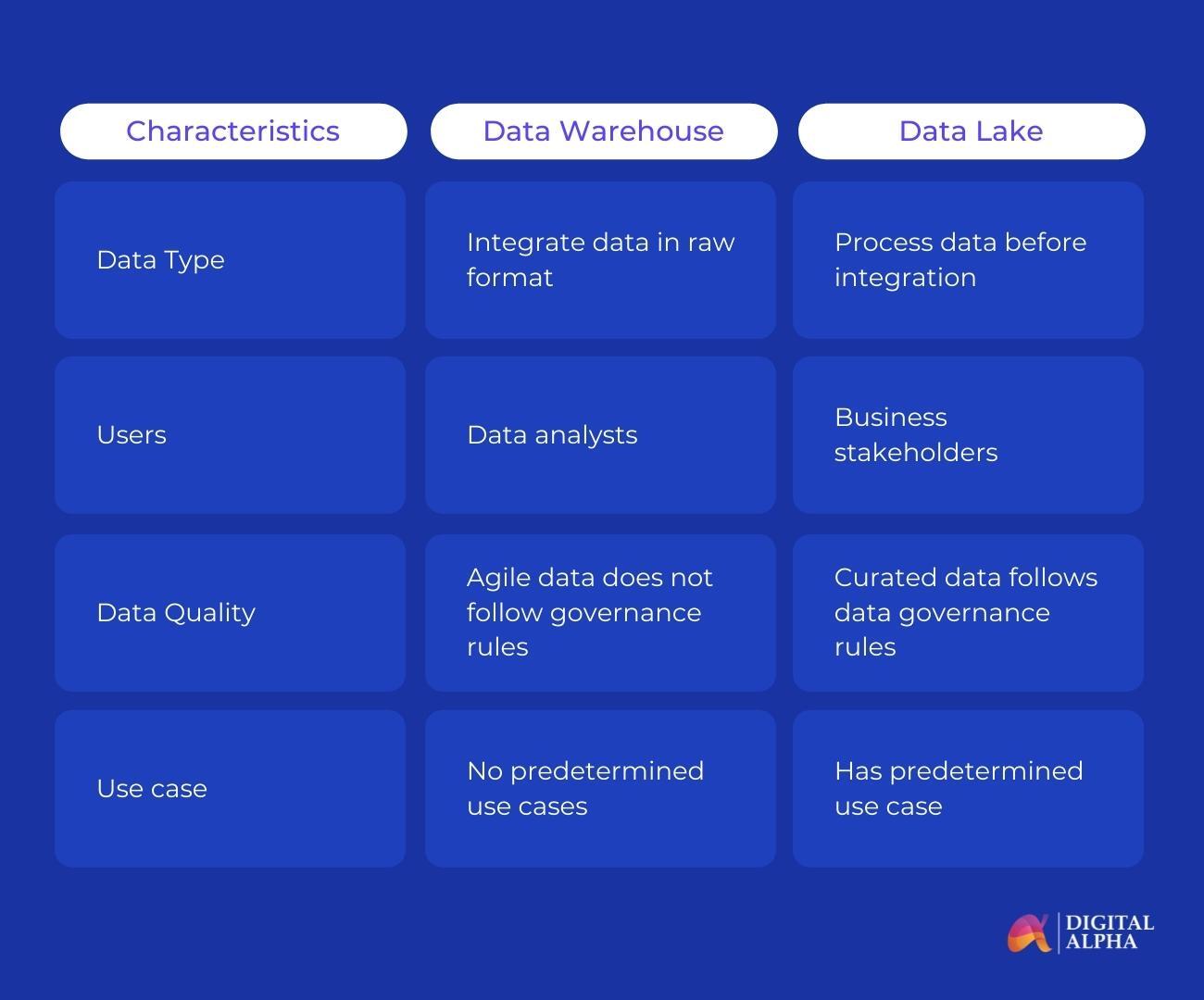

You could store both structured and unstructured data in data lakes. It is a large-scale repository that supports both basic visualizations and advanced machine learning (ML) methods. It is a centralized place for all of your raw, non summarized, and unedited data. Its size can vary from terabytes to petabytes.

Data warehouses come with a defined structure and fixed schema. They are capable of storing and analyzing only structured or relational data. Also data cleansing and transformation happen at fixed periods. It helps in better reporting and analysis.

Data lakehouse is a framework or architecture that enables both business users and data scientists to make meaning of the massive data stored in data lakes. It also enhances the scope of data warehouse and data lake supporting both AI (artificial intelligence) and BI (business intelligence) all at once.

Data Lake Architecture

The two main characteristics of data lake include durability and scalability. It should act as a versatile and stable storage layer. It should support and offer APIs and interfaces for better data accessibility and movement. Setting permissions or implementing encryption on stored datasets is seamless and plausible. Indexing, cataloging, and creating storage-value or tagging improves the ability to locate and process data.

Companies go through four different stages for a holistic data lake development or foundation. Fundamentally, the data lake comprises five constituents, including the ingestion layer, distillation layer, processing layer, operations segment, and insights layer. The ingestion layer describes the real-time streaming data sources or batch data buckets. Integrating advanced analytics platforms using an insights layer helps in retrieving actionable insights is derived in minutes. For process optimization and workflow management, the operations tier is helpful.

The need for new data lake architecture is two-fold —

- Existing architecture locks the data. Data migration is very costly.

- Even leading ML systems give sub-optimal outputs due to mustiness.

Upsides and Downsides of Data Lake

Adding consistency and governance to the data lake is essential for processing the raw data. Situations like data swamps are least considerate and hence should be avoided. Research teams can benefit from data lakes as they can test n number of test cases, hypotheses, or conjectures. Customer experience data like marketing, CRM will empower and expand the value that data lake beholds.

The Ending Note

Although relational databases and structured data analysis were the norms of the early days. As the internet age matured, too many data silos came into play, and that’s why unstructured data analysis became the need of the hour. These distributed data computing systems are actual game-changers.